Quality begins on the inside… and then works its way out. (Bob Moawad)

In his article “How Did My Data Quality Get So Bad?”, Kevin Joyce, CMO at marketing automation SaaS provider Market2Lead, said:

Dealing with bad data requires a mix of process, people and technology. The activities can be divided into those that prevent data decay, and those that detect data quality issues and subsequently help remediate those issues.

The Market2Lead Marketing Automation Technology has a terrific response management engine that validates addresses, rejects junk like asdf.com, suppresses public domain email addresses, normalizes data, and ensures all required fields have data in the required format.

But you still need to work on the People and Process to really get a handle on data quality because your Sales Reps and Client Services folks still get to put data directly into Salesforce.com and Garbage In means Garbage Out (GIGO).

It is important to note that Kevin has listed the kinds of data quality control functions that are fundamental to any marketing automation solution or Software-as-a-Service (SaaS). Marketers who do a lot of emailing want to eliminate duplicate email addresses and hard bounces.

B2B marketers also want to avoid contacts who use public email services like Hotmail, Yahoo and Gmail. Market2Lead has recognized all of this as critical enough to build into its marketing automation platform-as-a-service offering.

However, there is more to data quality control than these basic services. He alludes to this when he says that getting a real handle on data quality takes more than technology: it also takes people with the right skill sets and sound process rules.

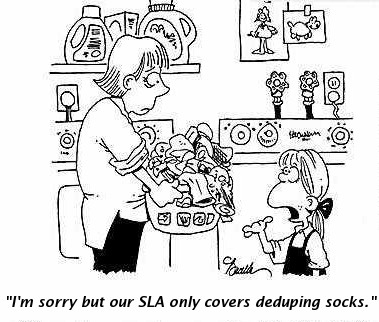

He couldn’t be more right, and this is especially true when operations run under a SaaS model. But first, let’s settle on some definitions of what to think of when you hear the term ‘data cleaning’.

I Thought You Said You’d Clean My Data?

- Data cleaning in B2B requires programmatic work on the data across at least 4 dimensions of quality through which all data must pass: a) Timeliness, b) Accuracy, c) Completeness and d) Consistency. Together they produce Integrity.

- We’re all after data integrity as the final definition of cleanliness.

- The initial emphasis is on programmatic (machine) work, not on manual (human) work.

- Machines produce semi-validated output to be wholly validated by human action.

So now, let me elaborate a bit on each of the above to make clearer what I’m talking about.

- What you as a marketer might conventionally call ‘data cleaning’ is what a data quality control technician would refer to by many procedural names, such as data formatting, standardizing, consolidating, casing, matching, deduping, fusing, enhancing and validating.

- Note that because data are run through many procedures to ‘clean’ them, every discussion between Marketing and IT carries an inherently high probability of misunderstanding.

- This misunderstanding will persist unless both sides acknowledge the distinction between the final result (a “clean” batch of data) and the work (many procedures) it takes to produce that result.

- I emphasize this because data might run through many software procedures and arrive at the highest state of technical cleanliness a machine can produce.

- And yet a data user might review the final result only to disagree with that assessment. That’s because machines do not know what you’re going to use your data for, and that final usage is the key determinant of quality.

- This is why I discuss data cleanliness in terms of 4 dimensions:

  - Timeliness: Are the data as fresh (or as aged) as your purposes require?

  - Accuracy: Are the data as precise as your purposes require?

  - Completeness: Are the data as comprehensive as your purposes require?

  - Consistency: Are the data as reliable as your purposes require?

- As I said, a technical team could programmatically achieve a high degree of cleanliness across each of these dimensions, compared with the condition in which the data arrived, and yet that degree might still fall short of what the user expects for the intended use.

- This is why a manual validation step should always be part of any programmatic data cleaning process: it is what ensures the highest degree of confidence in the data’s quality.

- This validation step is the last step in the cleansing process and is done by hand. It’s also the most expensive step, so it must be applied very selectively, to the most important segments of the machine output.
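
To make the gap between machine cleanliness and fitness for use concrete, here is a minimal Python sketch. It assumes a hypothetical contact record with `email`, `company`, `country` and `last_verified` fields; the alias table, the checks and the thresholds are illustrative assumptions, not a standard. The machine steps (trimming, casing, standardizing, deduping) yield a semi-validated batch, and thresholds chosen by the data user then decide which records are routed to manual validation. Only timeliness and completeness are checked; accuracy and consistency would need reference data and are left out.

```python
from dataclasses import dataclass, replace
from datetime import date

@dataclass(frozen=True)
class Contact:
    # Hypothetical record layout, for illustration only.
    email: str
    company: str
    country: str
    last_verified: date

# Illustrative standardization table, not a complete reference list.
COUNTRY_ALIASES = {"usa": "US", "united states": "US", "u.s.": "US", "uk": "GB"}

def normalize(c: Contact) -> Contact:
    """Machine steps: trimming, casing and standardizing values."""
    country = c.country.strip()
    country = COUNTRY_ALIASES.get(country.lower(), country.upper())
    return replace(c, email=c.email.strip().lower(),
                   company=c.company.strip().title(), country=country)

def dedupe(batch: list[Contact]) -> list[Contact]:
    """Keep only the most recently verified record for each email address."""
    best: dict[str, Contact] = {}
    for c in batch:
        kept = best.get(c.email)
        if kept is None or c.last_verified > kept.last_verified:
            best[c.email] = c
    return list(best.values())

def review_reasons(c: Contact, today: date, max_age_days: int,
                   required: tuple[str, ...]) -> list[str]:
    """Fitness-for-purpose checks; the thresholds are set by the data user.

    Only timeliness and completeness are checked in this sketch.
    """
    reasons = []
    if (today - c.last_verified).days > max_age_days:
        reasons.append("timeliness")
    if any(not getattr(c, field) for field in required):
        reasons.append("completeness")
    return reasons

# Usage: three raw records, two of which describe the same person.
raw = [
    Contact(" Ann@Acme.com ", "acme corp", "usa", date(2024, 1, 10)),
    Contact("ann@acme.com", "Acme Corp", "US", date(2024, 5, 2)),
    Contact("bob@initech.com", "", "uk", date(2022, 3, 1)),
]
clean = dedupe([normalize(c) for c in raw])  # semi-validated machine output
today = date(2024, 6, 1)
for c in clean:
    # Records with any reasons go to the (expensive) manual validation step.
    print(c.email, review_reasons(c, today, max_age_days=365,
                                  required=("email", "company")))
```

Because the thresholds are arguments rather than constants, the same machine output can pass for one campaign and be flagged for another, which is exactly the point about usage determining quality.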

Can All This Data Quality Control Work Be Done In A Cloud?

It takes a team, not just tools, to clean up marketing and sales data. It also takes a fairly wide range of skills and knowledge about not only what the data represent but also what they’re meant to enable for the business.

In short, it takes a team that will become intimately familiar with data integrity, and one that can act proactively to prevent poor data quality.

This team must set up point-of-entry data quality control rules and applications, because downstream these data entry controls will save the business more money and headaches than continually cleaning up a pool of garbage data.

For example, validating addresses at the point of entry lowers returned-mail costs downstream.
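
A point-of-entry rule set can start as a function that runs before a record is saved and returns the reasons to reject it. The minimal Python sketch below assumes hypothetical junk-domain and public-domain lists and a hypothetical set of required fields. It checks email syntax and domains only; validating postal addresses would need a real address-verification service, which is not modeled here.

```python
import re

# Illustrative lists only; a real deployment would maintain these centrally.
JUNK_DOMAINS = {"asdf.com", "test.com", "example.com"}
PUBLIC_DOMAINS = {"hotmail.com", "yahoo.com", "gmail.com"}
REQUIRED_FIELDS = ("first_name", "last_name", "email", "company")

# Deliberately loose syntax check: local-part@domain.tld
EMAIL_RE = re.compile(r"^[^@\s]+@([^@\s]+\.[A-Za-z]{2,})$")

def check_at_entry(record: dict[str, str]) -> list[str]:
    """Return the reasons to reject a record; an empty list means accept."""
    errors = [f"missing {field}" for field in REQUIRED_FIELDS
              if not record.get(field, "").strip()]
    email = record.get("email", "").strip().lower()
    match = EMAIL_RE.match(email)
    if email and not match:
        errors.append("malformed email")
    elif match:
        domain = match.group(1)
        if domain in JUNK_DOMAINS:
            errors.append("junk domain")
        elif domain in PUBLIC_DOMAINS:
            errors.append("public email domain (B2B rule)")
    return errors

# Usage: one junk entry and one B2B-rule violation with a missing field.
print(check_at_entry({"first_name": "Ann", "last_name": "Lee",
                      "email": "ann@asdf.com", "company": "Acme"}))
print(check_at_entry({"first_name": "Bob", "last_name": "",
                      "email": "bob@gmail.com", "company": "Initech"}))
```

Returning a list of reasons rather than a single yes/no lets the entry form tell the sales rep exactly what to fix before the record ever reaches the database.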

The future lies in the development of more sophisticated on-demand data integration and data quality control software offerings that could link on-premises data systems with marketing and sales SaaS solutions through web-based interfaces. These data quality control SaaS solutions should cover more than what Kevin Joyce mentioned.

These SaaS solutions should be able to enforce data governance and complement a master data management (MDM) model for resolving contact and account identity conflicts across disparate systems. They should also support data profiling and data quality scoring and tracking, since sound data quality is what makes it possible to produce the accurate and reliable metrics that help the business execute on new services and opportunities.
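
To illustrate one piece of this, resolving contact identity conflicts across systems, here is a minimal match-and-merge sketch in Python. It assumes two hypothetical sources (a CRM export and a marketing automation export) whose records are dicts with an `email` key and an `updated` date, matches them on normalized email address, and applies one simple survivorship rule: for each field, the most recently updated non-empty value wins. Real MDM tools use far richer matching (fuzzy names, addresses, account hierarchies); the field names and the rule here are assumptions.

```python
from collections import defaultdict
from datetime import date

def golden_records(*sources: list[dict]) -> dict[str, dict]:
    """Merge contact records that share a normalized email address.

    For every field, the value from the most recently updated record
    that has a non-empty value survives into the golden record.
    """
    groups: dict[str, list[dict]] = defaultdict(list)
    for source in sources:
        for rec in source:
            groups[rec["email"].strip().lower()].append(rec)

    merged = {}
    for email, recs in groups.items():
        golden = {"email": email}
        # Oldest first, so newer non-empty values overwrite older ones.
        for rec in sorted(recs, key=lambda r: r["updated"]):
            for field, value in rec.items():
                if field not in ("email", "updated") and value:
                    golden[field] = value
        golden["updated"] = max(r["updated"] for r in recs)
        golden["sources"] = len(recs)  # how many records were merged
        merged[email] = golden
    return merged

# Usage: the same person appears in both hypothetical systems.
crm = [{"email": "Ann@Acme.com", "title": "VP Marketing", "phone": "",
        "updated": date(2024, 3, 1)}]
marketing_automation = [
    {"email": "ann@acme.com", "title": "CMO", "phone": "555-0100",
     "updated": date(2024, 5, 1)},
    {"email": "bob@initech.com", "title": "Analyst", "phone": "",
     "updated": date(2023, 9, 1)},
]
result = golden_records(crm, marketing_automation)
print(result["ann@acme.com"])
```

Keeping the survivorship rule in one place makes it a governance decision the team can review and change, rather than an accident of whichever system synced last.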

If all this does not seem to fit with your concept of SaaS marketing and process automation, keep the context in mind.

“Most B2B marketing executives lament the status of their databases, but have difficulty convincing senior management of the gravity of the problem,” says Jonathan Block, SiriusDecisions senior director of research.

The amount of data in the average B2B organization typically doubles every 12 to 18 months, so even if data is relatively clean today, it’s usually only a matter of time before things break down. The longer incorrect records remain in a database, the greater the financial impact.

This point is illustrated by the 1-10-100 rule: It takes $1 to verify a record as it’s entered, $10 to cleanse and de-dupe it and $100 if nothing is done, as the ramifications of the mistakes are felt over and over again.
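
The 1-10-100 rule lends itself to back-of-the-envelope arithmetic. The Python sketch below applies the rule’s per-record costs to a hypothetical count of bad records and, as an added assumption, projects database growth using the upper end (18 months) of the doubling figure cited above. The record counts and the 5% bad-record rate are illustrative, not benchmarks.

```python
# Per-record costs, in dollars, from the 1-10-100 rule.
COST_PER_RECORD = {
    "verify at entry": 1,
    "cleanse and de-dupe later": 10,
    "do nothing": 100,
}

def rule_costs(bad_records: int) -> dict[str, int]:
    """Dollar cost of handling the same bad records at each stage."""
    return {stage: bad_records * unit for stage, unit in COST_PER_RECORD.items()}

def projected_records(current: int, months: int, doubling_months: float = 18) -> int:
    """Database size after `months`, assuming it doubles every `doubling_months`."""
    return round(current * 2 ** (months / doubling_months))

# Hypothetical scenario: 50,000 records today, 5% of them bad.
records_now = 50_000
bad_now = records_now * 5 // 100
for stage, dollars in rule_costs(bad_now).items():
    print(f"{stage}: ${dollars:,}")
print(f"records in 36 months: {projected_records(records_now, 36):,}")
```

Under these assumptions the same 2,500 bad records cost $2,500 to stop at the door and $250,000 if left alone, and if the bad-record rate holds, that pool grows along with the database.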

A data quality control SaaS solution would give you an economical way to experiment with your other marketing and sales automation SaaS solutions and evaluate the monetary impact of poor data on your bottom line. Look into it.

Some strong players like Informatica and Acxiom are making inroads. Other data quality control providers like Purisma are also worth a peek. Smaller businesses can rely on boutique data quality control service providers, such as this one.